Blog Posts

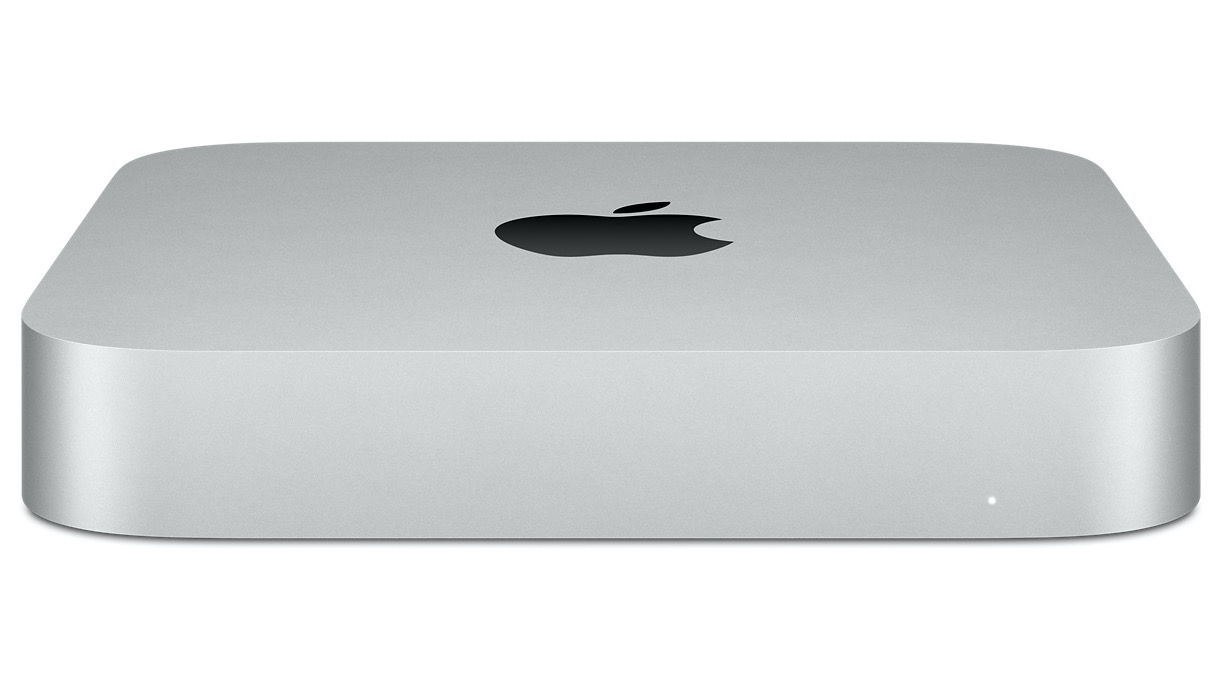

We had a mishap with our Mac Mini M1 server over the weekend of 11/20/2021 - 11/21/2021 and experienced the first extended downtime with this server. Fortunately, it wasn't a serious issue, but since it was over the weekend and it essentially disrupted any email contact, it lasted much ...

This is just a quick look at a process we run for checking on Northern Lights activity. The gist of it is that we download a file from a NOAA site, convert it from JSON to CSV, do a quick check to make sure the data is new (dtCreated and dtValid), then load it into the database. The NOAA file has 65,160 records with 5 fields ...
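The convert, check and load steps described above might look roughly like the following. This is only a minimal sketch, not the actual process: the JSON layout (a `coordinates` list alongside top-level `dtCreated` and `dtValid` values), the column names `lon`, `lat` and `aurora`, the `aurora` table, and the use of SQLite are all assumptions made for illustration, and the download step is left out.

```python
import csv
import io
import json
import sqlite3

# Hypothetical column names; the excerpt only says there are 5 fields.
FIELDS = ["dtCreated", "dtValid", "lon", "lat", "aurora"]


def make_db():
    """Create an in-memory database with an (assumed) aurora table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE aurora "
        "(dtCreated TEXT, dtValid TEXT, lon REAL, lat REAL, aurora REAL)"
    )
    return conn


def json_to_csv(payload):
    """Flatten the assumed JSON layout into CSV text with a header row."""
    doc = json.loads(payload)
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(FIELDS)
    for lon, lat, aurora in doc["coordinates"]:
        writer.writerow([doc["dtCreated"], doc["dtValid"], lon, lat, aurora])
    return out.getvalue()


def is_new(conn, dt_created, dt_valid):
    """Data is new if no stored row has the same dtCreated and dtValid."""
    row = conn.execute(
        "SELECT 1 FROM aurora WHERE dtCreated = ? AND dtValid = ? LIMIT 1",
        (dt_created, dt_valid),
    ).fetchone()
    return row is None


def load_csv(conn, csv_text):
    """Load the CSV rows if they are new; return the number of rows inserted."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows or not is_new(conn, rows[0]["dtCreated"], rows[0]["dtValid"]):
        return 0
    conn.executemany(
        "INSERT INTO aurora VALUES (?, ?, ?, ?, ?)",
        [tuple(r[f] for f in FIELDS) for r in rows],
    )
    conn.commit()
    return len(rows)
```

Calling `load_csv` a second time with the same file inserts nothing, because the freshness check finds the existing dtCreated and dtValid pair already in the table.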

From https://www.weforum.org/agenda/2021/06/remote-workers-burnout-covid-microsoft-survey/

A hybrid blend of in-person and remote work could help maintain a sense of balance – but bosses need to do more. More than half of 18 to 25 year-olds in the workforce are considering quitting their job. ...

Yesterday, 06/21/2021, I did a quick road trip from Indiana to Wisconsin to retrieve an older Mac Mini Server that has been providing website, database and email service for the websites Custom Visuals manages. The older one was a 2012 model that was built in March of 2014 and deployed in June of the same year. ...

We check for different types of attacks on our colocated server and ban the offending IP addresses for increasing amounts of time as the attacks continue. For instance, let’s say you’ve been trying to guess VPN accounts, and each failed attempt generates an error. If you cross a threshold of, say, 10 attempts, the IP address will be firewalled for a while. Once that time is up, the IP address can then access the ...
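The threshold-then-ban idea above can be sketched as follows. This is an illustration under stated assumptions rather than the actual setup: the `BanTracker` class, the 600-second base ban, the doubling of each repeat ban, and the resetting of the failure count after a ban are all hypothetical choices. Only the threshold of 10 attempts and the idea of longer bans for continued attacks come from the description.

```python
from dataclasses import dataclass

THRESHOLD = 10          # failed attempts before a ban (from the example above)
BASE_BAN_SECONDS = 600  # hypothetical length of the first ban


@dataclass
class IpState:
    failures: int = 0         # failures since the last ban
    bans: int = 0             # how many times this IP has been banned
    banned_until: float = 0.0


class BanTracker:
    """Tracks failures per IP and hands out bans that double each time."""

    def __init__(self, threshold=THRESHOLD, base_ban=BASE_BAN_SECONDS):
        self.threshold = threshold
        self.base_ban = base_ban
        self.states = {}

    def is_banned(self, ip, now):
        state = self.states.get(ip)
        return state is not None and now < state.banned_until

    def record_failure(self, ip, now):
        """Record one failed attempt; return the ban length applied, or 0."""
        state = self.states.setdefault(ip, IpState())
        state.failures += 1
        if state.failures < self.threshold:
            return 0
        # Assumed escalation: each repeat ban is twice as long as the last.
        duration = self.base_ban * (2 ** state.bans)
        state.bans += 1
        state.failures = 0
        state.banned_until = now + duration
        return duration
```

In practice the returned duration would be handed to whatever firewall tool applies the block; that part is not shown here.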

As someone who works remotely 100%, I like seeing these surveys and reports that show how many others are doing the same and how it fits their lifestyle.

I have a very nice home office above our garage with a view of our beautiful neighborhood. This place looks like it's straight out of a picture book throughout the seasons. I occasionally take my laptop on the road to a park or Lake Michigan ...

Zoffix provides a relevant discussion of the Perl vs. Rakudo naming question, for the language that is not exactly a successor to Perl 5, at http://blogs.perl.org/users/zoffix_znet/2017/07/the-hot-new-language-named-rakudo.html.

I’ve been in the “rename it” camp for a year, not that I’ve shared that with anyone ...

When Custom Visuals started in 1995 (as Custom Data Visualizations), we were working on projects for individual companies with little emphasis on long-term projects utilizing our own servers. That all changed when an environmental company requested a web scraping application for a budget estimation project.

The project started off as a simple request to scrape a few dozen pages from a site ...

Do some industries use one type more than others?

Many terms describe essentially the same process: automatically getting data from, or sending data to, a remote site. The most common phrase is software automation, but you’ll also hear software bot, software agent, virtual robot, or just bot, all referring to the same concept. And it’s a concept Custom Visuals uses all ...

If this blog post describes you or your business, please visit our Contact page and let my company help out.

As you may know, Custom Visuals automates as much as we possibly can as part of our core business. We have a couple thousand unattended tasks running on our servers (co-located and in-house) on behalf of our clients throughout the day (not including the tasks the operating system runs on ...