Web Access Errors not What I Suspected

Introduction

As a server administrator running a Mac Mini M1 as a colocated server, I manage my small business website and a handful of others using Apache. Recently, I encountered an issue that threw me for a loop: excessive errors flooding my web server logs. At first, I suspected a hack due to the sheer volume of alerts, but it turned out to be a misbehaving web crawler—Amazonbot. This blog post walks through my journey of identifying and resolving this issue, offering insights into log analysis, script-based monitoring, and even a bit of vendor communication. If you’re a mid-level developer or server admin, I hope you’ll find practical takeaways here for handling similar challenges.

Problem Discovery

The trouble started when my monitoring system began highlighting alerts about an unusually high number of errors in the Apache web server logs. I’ve set up this system to flag potential issues like spam attempts or authentication failures, but this time the errors were relentless, hitting every few seconds. When the errors exceed a threshold, the email notices change color in my inbox, and I was seeing a lot of brightly colored messages. The specific message in the logs was "File name too long," which immediately set off alarm bells. Was this an exploit trying to overwhelm my server with oversized file paths? The frequency and nature of the errors pointed to something serious, so I dove in to investigate.

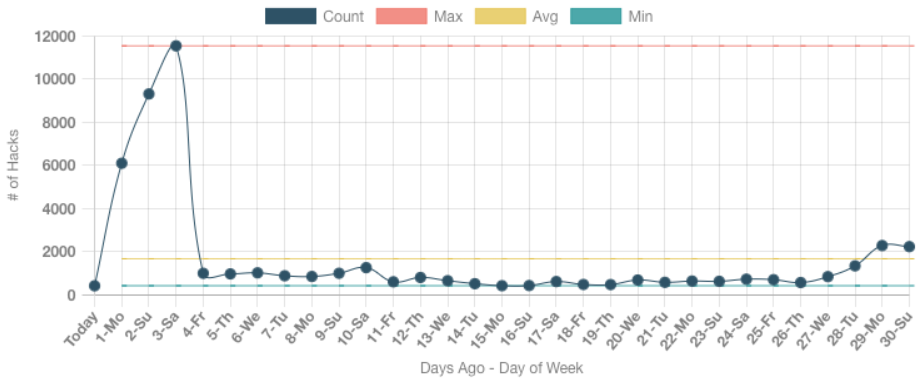

Here is a graph indicating the errors piling up, then getting resolved in a couple days. I wasn't recording these errors in the graph until I updated the program described below, but you can see the impact it was having.

This is the "Hack Attempts per Day" graph, which comes from the Services -> Processing section on my site.

Investigation

My first step was to get eyes on the problem in real time. I opened a terminal and ran tail -f error_log, a handy trick for watching log entries as they roll in, especially when errors are showing up constantly. (In this post, error_log and access_log are shorthand; the real files have much uglier names.) Sure enough, the error log was a flurry of activity compared to its usual pace. Each line included a datetime, an IP address, and the "File name too long" message, confirming the issue was ongoing and consistent.

For context, here are sample log entries that captured the issue, occurring about every 4 seconds (23,000+ entries for the day shown). First, the error log:

[Sat Mar 22 23:59:51.672069 2025] [core:error] [pid 9958] (63)File name too long: [client 44.221.105.234:25481] AH00127: Cannot map GET /services/websites/index.php/contact/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/about/faq/files/files/services/consulting/blog/files/files/blog/files/files/about/blog/contact/blog/blog/about/files/about/files/about/about/contact/about/files/faq/about/files/contact/files/contact/files/files/contact/about/services/files/image_256-348.png HTTP/1.1 to fileAnd the access log:

44.221.105.234 - - [22/Mar/2025:23:59:51 -0500] "GET /services/websites/index.php/contact/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/files/about/faq/files/files/services/consulting/blog/files/files/blog/files/files/about/blog/contact/blog/blog/about/files/about/files/about/about/contact/about/files/faq/about/files/contact/files/contact/files/files/contact/about/services/files/image_256-348.png HTTP/1.1" 403 199 "-" "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; Amazonbot/0.1; +https://developer.amazon.com/support/amazonbot) Chrome/119.0.6045.214 Safari/537.36"

To state the obvious, that path does not exist on my server. However, the file does exist at /services/websites/files/image_256-348.png within that domain.

As I was looking at the requests that were triggering these errors, I spotted the culprit: the client was identifying itself as "Amazonbot/0.1"—Amazon’s web crawler. The bizarre requests had URLs with paths that repeated the segment "/files" around 100 times. How the block of 100+ repeats got in there is anyone's guess.

These requests were met with 403 Forbidden responses, meaning Apache was rejecting them due to the absurdly long paths. I was still convinced this was a hack, with the attacker pretending to be a well-known bot from Amazon. So, I ran a reverse DNS lookup on the IP address to see where it was really coming from. Much to my surprise, it was actually Amazon.

$ host 44.221.105.234

234.105.221.44.in-addr.arpa domain name pointer 44-221-105-234.crawl.amazonbot.amazon.

I relaxed a bit at this point: this wasn’t a hack but a bot gone haywire, likely a bug or misconfiguration in Amazonbot’s crawling logic.
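A reverse lookup on its own can be fooled, since whoever controls an IP block also controls its PTR records. A common way to harden the check is forward-confirmed reverse DNS: resolve the IP to a hostname, check the suffix, then resolve that hostname back and confirm it returns the same IP. The sketch below is in Python rather than Perl and is not part of my monitoring script. The function name and the injectable lookup parameters are invented for illustration, and the crawl.amazonbot.amazon suffix is simply taken from the host output above.

```python
import socket


def is_verified_amazonbot(ip,
                          reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Forward-confirmed reverse DNS check for a claimed Amazonbot IP.

    The two lookup parameters default to the real resolver calls and
    exist only so the function can be exercised without network access.
    """
    try:
        # gethostbyaddr returns (hostname, aliases, addresses)
        hostname = reverse_lookup(ip)[0]
    except OSError:
        return False

    # Suffix assumed from the `host` output shown in this post.
    if not hostname.rstrip(".").endswith(".crawl.amazonbot.amazon"):
        return False

    try:
        # gethostbyname_ex returns (hostname, aliases, addresses)
        addresses = forward_lookup(hostname)[2]
    except OSError:
        return False

    # Only trust the name if it maps back to the address we started with.
    return ip in addresses
```

Calling is_verified_amazonbot("44.221.105.234") performs both lookups against live DNS, so the result depends on what the resolver returns at the time.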

Oddly enough, there were a lot of bots scanning a specific domain. I ran a few shell commands to isolate the IP address and give me a count of how many bots were poking away and how often they were running.

cat error_log | grep 'File name too long:' | cut -d' ' -f14 | cut -d: -f1 | sort -h | uniq -c | sort -nr

In short, this says: find every line in the log file containing 'File name too long:', cut out the field holding the IP address and port, cut the port off, sort so that identical addresses end up next to each other (uniq -c only counts adjacent duplicates, so a plain sort would do just as well as sort -h here), count how many times each unique IP address appears, and sort the highest counts to the top.

This gave me 433 lines, with the top 5 looking like this:

58 52.204.71.8

51 52.45.77.169

49 98.82.66.172

49 34.226.89.140

49 34.202.88.37

As noted above, the total number of these lines was over 23,000 for the day.
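The same tally can be done in a few lines of Python, which is handy when the counts need to feed into another program. This is an illustrative sketch rather than the command used above: count_offenders and CLIENT_RE are names invented here, and the pattern assumes the error log layout shown earlier.

```python
import re
from collections import Counter

# Matches the "[client IP:port]" part that follows the error text.
CLIENT_RE = re.compile(
    r"File name too long: \[client (\d+\.\d+\.\d+\.\d+):\d+\]"
)


def count_offenders(lines):
    """Count 'File name too long' errors per client IP address."""
    counts = Counter()
    for line in lines:
        match = CLIENT_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Example use against a log file (path is a placeholder):
#   with open("error_log", errors="replace") as log:
#       for ip, hits in count_offenders(log).most_common(5):
#           print(hits, ip)
```

Counter.most_common(5) plays the role of sort -nr followed by reading off the top five lines.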

To analyze the logs systematically, I wrote a regular expression to extract key details—datetime and IP address—from the error log entries. Stripping most of the repeated /files block from the error log, the line looks like this:

[Sat Mar 22 23:59:51.672069 2025] [core:error] [pid 9958] (63)File name too long: [client 44.221.105.234:25481] AH00127: Cannot map GET /services/websites/.../files/image_256-348.png HTTP/1.1 to file

And the regex for what I need looks like this:

$line =~ /(\S{3}).(\d{2}).(\d{2}.\d{2}.\d{2}).(\d{6}).(\d{4}).*File name too long.* (\d+\.\d+\.\d+\.\d+):\d+.*/

I'll break that down in the next section.

Solution

To tackle this, I leaned on a Perl script I originally wrote a decade ago after a real security incident. Back then, hackers exploited a client’s email password, most likely from an Internet cafe they had recently visited, turning my server into a spam relay. That experience prompted me to build a robust monitoring tool in Perl—my go-to language at the time for my day job. This script is a workhorse. It monitors server logs either by invoking the log command or reading log files directly, running checks every 5 minutes (or longer, depending on the log type).

It scans for a laundry list of issues: failed password attempts, non-existent usernames, suspicious program names, unknown email addresses, web server log errors tied to PHP programs, WordPress admin commands, and database access. It tracks IP addresses in a database, flagging patterns like password guesses or command injections. If an IP crosses a threshold of bad behavior, the script blocks it using afctl for a duration that scales with the offense frequency—more attempts = longer bans. It queries AbuseIPDB.com to email the IP owner with incident details. For this Amazonbot issue, I updated the script to detect "file name too long" errors. While the problem stemmed from Amazonbot, I designed the logic to catch any source of such errors, making it a general-purpose fix.
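To make the "more attempts = longer bans" idea concrete, here is a small Python sketch of one possible escalation policy. It is not the logic from my Perl script: the threshold, base duration, cap, and doubling rule are all hypothetical values chosen for illustration, and the actual blocking step is left out.

```python
from datetime import timedelta

# All three values are hypothetical, not taken from the real script.
THRESHOLD = 5                      # offenses tolerated before the first ban
BASE_BAN = timedelta(minutes=15)   # length of the first ban
MAX_BAN = timedelta(days=7)        # upper limit on any ban


def ban_duration(offense_count):
    """Return how long to block an IP, or None if it is under the threshold.

    The ban doubles with every offense past the threshold, up to MAX_BAN.
    """
    if offense_count < THRESHOLD:
        return None
    # Cap the exponent so very large counts cannot overflow timedelta.
    doublings = min(offense_count - THRESHOLD, 20)
    return min(BASE_BAN * (2 ** doublings), MAX_BAN)
```

Under these assumed numbers, the fifth offense earns a 15-minute ban, the sixth 30 minutes, and a persistent offender tops out at one week.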

The new error type gets entered in a few sections of the code.

There is a section for what kind of error I'm looking for and where the error would be extracted from:

file_long => '/var/log/apache2/error_log',

A section mapping these error types to a brief description:

file_long => 'error trying to access an extremely long file path',

A section mapping the error type in my code to the report category at AbuseIPDB.com (19 is its "Bad Web Bot" category):

file_long => 19,

Here’s the block of code that strips out the datetime ($dtStr) and IP address ($ipAddr) from the log file:

elsif (($logType eq 'file_long')

&& ($line =~ /(\S{3}).(\d{2}).(\d{2}.\d{2}.\d{2}).(\d{6}).(\d{4}).*File name too long.* (\d+\.\d+\.\d+\.\d+):\d+.*/)) {

$dtStr = sprintf "%04d-%02d-%02d %s.%s", $5, $mapMon{$1}, $2, $3, $4;

$ipAddr = $6;

}

Let's break down that regex now. Here's our very long line with 100+ /files stripped out, since we don't care what the file name was, just the error message indicating it was too long:

[Sat Mar 22 23:59:51.672069 2025] [core:error] [pid 9958] (63)File name too long: [client 44.221.105.234:25481] AH00127: Cannot map GET /services/websites/.../files/image_256-348.png HTTP/1.1 to file

And the regex for what we need looks like this. We'll deal with all the parentheses, but let's ignore them for a moment:

$line =~ /(\S{3}).(\d{2}).(\d{2}.\d{2}.\d{2}).(\d{6}).(\d{4}).*File name too long.* (\d+\.\d+\.\d+\.\d+):\d+.*/

Each single . matches any one character: a space, colon, period, etc.

\S{3} matches three non-whitespace characters, which could be Sat (day of week) or Mar (month of year), but we only want Mar

.\d{2} matches one separator character and then two digits, as in 22, the day of the month. With a single . between \S{3} and \d{2}, that gives us Mar and 22, since Sat is not followed by two digits

.\d{2}.\d{2}.\d{2} matches three two-digit groups in a row: the hour, minute and second of the day, 23:59:51

.\d{6} for microseconds, 672069

.\d{4} for the year, 2025

'File name too long' after the datetime

\d+\.\d+\.\d+\.\d+ for the IP address, 44.221.105.234, where the + matches one or more digits and \. matches a literal period

:\d+ for the port of the IP address, which we want to make sure is there, but don't care about, 25481

So, about all those parentheses. Perl uses them as capture groups, storing whatever each group matched in a numbered variable. The variables are numbered from left to right, starting at 1, i.e. $1, $2, $3, etc. In this case, we have 6 sets of parentheses:

$1 = 'Mar'

$2 = 22

$3 = '23:59:51'

$4 = 672069

$5 = 2025

$6 = '44.221.105.234'

We combine variables $1 through $5 into a datetime string formatted as 'YYYY-mm-dd HH:MM:SS.uuuuuu', using the year ($5) first, mapping the month ($1) Mar to 3 via a lookup table called mapMon, then the day ($2), the HH:MM:SS ($3), and the microseconds ($4). The microseconds are formatted with %s rather than %d so that a leading zero (as in 072069) is preserved.

$dtStr = sprintf "%04d-%02d-%02d %s.%s", $5, $mapMon{$1}, $2, $3, $4;

This equates to $dtStr = '2025-03-22 23:59:51.672069'

And the IP address is simply $6, 44.221.105.234, stored in $ipAddr
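For readers who don't use Perl, here is a rough Python equivalent of the same extraction, using the same pattern and the same output format. It is a sketch, not a port of my script: parse_file_long, LINE_RE, and MONTHS are names invented here, and MONTHS stands in for the mapMon table, whose actual contents aren't shown in this post.

```python
import re

# Stand-in for the Perl %mapMon lookup: 'Jan' -> 1 ... 'Dec' -> 12.
MONTHS = {
    name: number
    for number, name in enumerate(
        ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
         "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"],
        start=1,
    )
}

# Same six capture groups as the Perl regex:
# month, day, HH:MM:SS, microseconds, year, client IP.
LINE_RE = re.compile(
    r"(\S{3}).(\d{2}).(\d{2}.\d{2}.\d{2}).(\d{6}).(\d{4})"
    r".*File name too long.* (\d+\.\d+\.\d+\.\d+):\d+"
)


def parse_file_long(line):
    """Return (datetime_string, ip) for a matching line, else None."""
    match = LINE_RE.search(line)
    if match is None:
        return None
    month, day, hms, micros, year, ip = match.groups()
    if month not in MONTHS:
        return None
    # %s keeps the microseconds as text, so leading zeros survive.
    dt_str = "%04d-%02d-%02d %s.%s" % (
        int(year), MONTHS[month], int(day), hms, micros
    )
    return dt_str, ip
```

Fed the shortened sample line from above, this returns the pair ('2025-03-22 23:59:51.672069', '44.221.105.234'), matching the Perl result.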

Meanwhile, I took a second approach: I emailed Amazonbot’s feedback address, attaching relevant log snippets to illustrate the problem. After a few days, I got a reply confirming the issue was resolved, though they didn’t elaborate on their fix. Sure enough, the errors stopped shortly after.

Conclusion

What began as a suspected attack turned out to be a case of a bot behaving badly. By tailing logs with tail -f, analyzing access and error entries, and updating my Perl monitoring script, I brought the situation under control, and Amazon fixed the issue on their end a few days later. This experience reinforced the value of real-time log monitoring as a first line of defense: it’s a quick way to spot recurring errors and get a handle on what’s happening. It also showed how a flexible, script-based approach can adapt to new challenges, whether from a rogue crawler like Amazonbot or something else entirely.

Reaching out to Amazon proved worthwhile, and their reply came within a few days; even without details on their fix, the errors stopped. For fellow server admins and developers, this is a reminder that not every anomaly is malicious. Sometimes it’s just a bot being a bot. Keep your tools sharp and your logs close, and you’ll be ready for whatever comes your way.